All you need to know about your products!

| 3DNews Vendor Reference English Resource - All you need to know about your products! |

MSI GeForce FX 5700 Ultra/NonUltra

Date: 07/05/2004

By:

Introduction

Quite a while has passed since we tested the NVIDIA GeForce FX 5700 Ultra reference board. Now we are moving on to tests of production NVIDIA GeForce FX 5700 Ultra cards. Our lab has also received the non-Ultra version of the board, built on the NVIDIA GeForce FX 5700 chip. As always, NVIDIA's Ultra and non-Ultra chips differ only in clock speeds.

Russian users have long been known for their eagerness to overclock and to use whatever tricks squeeze out some extra fps "from thin air". Buying a non-Ultra/non-Pro video card with an eye toward overclocking it, if not all the way to its "Ultra" big brother then at least close to that mark, is risky, but it offers several advantages if you know the specifics of the chip manufacturing technology and the final prices that graphics card manufacturers charge for Ultra and non-Ultra solutions. That's why we were very keen to explore all the ins and outs of the MSI FX5700-VTD128 video card built on the NVIDIA GeForce FX 5700 chip and compare it to its Ultra modification (MSI FX5700U-TD128), as well as to directly competing products by ATI.

This is where many disputable points come up. Which ATI solution is the NVIDIA GeForce FX 5700 positioned against? Things are clear with NVIDIA GeForce FX 5700 Ultra and ATI Radeon 9600 XT: they are direct competitors (although, given the realities of the Russian market, the price factor to date is not in favor of the ATI product). The situation with NVIDIA GeForce FX 5700 is less straightforward. At first glance, the product should be positioned against ATI Radeon 9600. However, we'll proceed first from the pricing factor, and there things are not so bright for NVIDIA.

Comparing the average prices (at www.price.ru) for video boards from various manufacturers based on the ATI Radeon 9600, ATI Radeon 9600 Pro, ATI Radeon 9600XT, NVIDIA GeForce FX 5700 and NVIDIA GeForce FX 5700 Ultra chips, we get quite an amusing picture. The average prices for NVIDIA GeForce FX 5700 and ATI Radeon 9600 Pro are about the same. On the other hand, going by price, we can't set NVIDIA GeForce FX 5700 against ATI Radeon 9600, because the product based on the NVIDIA chip is more expensive. So we built today's review around the pricing factor (for details, see the tests section).

We were also curious to look at the MSI product line, given the many rumors about MSI manufacturing boards built on ATI chips. For now, we can observe only boards built on NVIDIA chips:

Today, we'll be testing two mid-priced video cards: FX5700Ultra-TD128 and FX5700-VTD128.

NVIDIA GeForce FX 5700 and NVIDIA GeForce FX 5700 Ultra Chips

In our roundup table, we gathered the characteristics of the NVIDIA GeForce FX 5700 and NVIDIA GeForce FX 5700 Ultra chips, of their predecessor, the NVIDIA GeForce FX 5600 Ultra, and of their mid-range competitor built on an ATI chip, the ATI Radeon 9600XT.
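Peak memory bandwidth is one characteristic that can be derived directly from the figures in such a roundup. The Python sketch below assumes the 128-bit memory bus and the DDR memory clocks quoted in this review (275 MHz for the FX 5700, 450 MHz for the FX 5700 Ultra). The function name `memory_bandwidth_gb_s` is ours and purely illustrative.

```python
def memory_bandwidth_gb_s(bus_width_bits: int,
                          memory_clock_mhz: float,
                          transfers_per_clock: int = 2) -> float:
    """Peak memory bandwidth in GB/s (decimal: 1 GB = 10**9 bytes).

    transfers_per_clock defaults to 2 because DDR memory moves data
    on both edges of the clock signal.
    """
    bytes_per_transfer = bus_width_bits / 8
    bytes_per_second = bytes_per_transfer * transfers_per_clock * memory_clock_mhz * 1e6
    return bytes_per_second / 1e9


# Physical memory clocks in MHz as quoted in the review (assumed here, not measured).
MEMORY_CLOCKS_MHZ = {
    "GeForce FX 5700": 275.0,
    "GeForce FX 5700 Ultra": 450.0,
}

for chip, clock in MEMORY_CLOCKS_MHZ.items():
    print(f"{chip}: {memory_bandwidth_gb_s(128, clock):.1f} GB/s")
```

Under these assumptions the non-Ultra chip gets roughly 61% of the Ultra's peak memory bandwidth, while its core clock is about 89% of the Ultra's.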

As we can see, the NVIDIA GeForce FX 5700 chip differs from NVIDIA GeForce FX 5700 Ultra only in frequencies (which is how it should be with Ultra and non-Ultra modifications of NVIDIA boards). The NVIDIA GeForce FX 5700 runs at 425 MHz / 275 MHz (550 DDR) versus 475 MHz / 450 MHz (900 DDR) for NVIDIA GeForce FX 5700 Ultra. Note that the memory clock of NVIDIA GeForce FX 5700 has been lowered much more than the core clock. The main reason is that boards built on the NVIDIA GeForce FX 5700 chip had to be made cheaper than the rather costly NVIDIA GeForce FX 5700 Ultra boards with DDR-II memory. That can't be done without replacing the DDR-II memory and simplifying the board's wiring, which in turn means installing slower memory chips. We'll give more focus to this point in the part of the review dealing with the PCB of the NVIDIA GeForce FX 5700. As the memory clock dropped, so did the memory bandwidth (128 bit / 8 * 2 * 275 MHz = 8.8 GB/s), which, given the far less reduced core clock, is likely to leave the chip short of memory bandwidth. Nevertheless, let's see what the synthetic benchmarks show.

MSI FX5700U-TD128 Video Card Features

Package bundle:

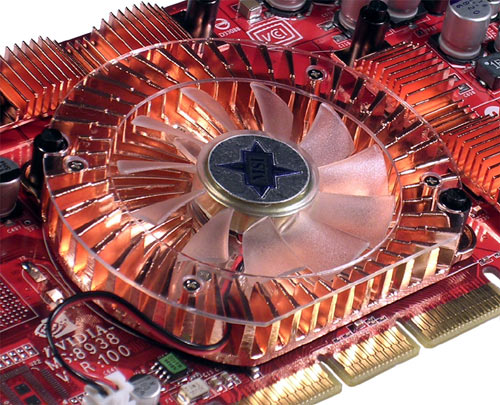

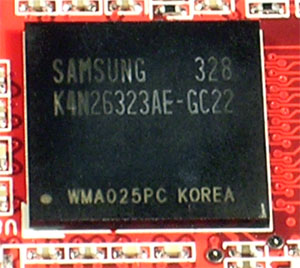

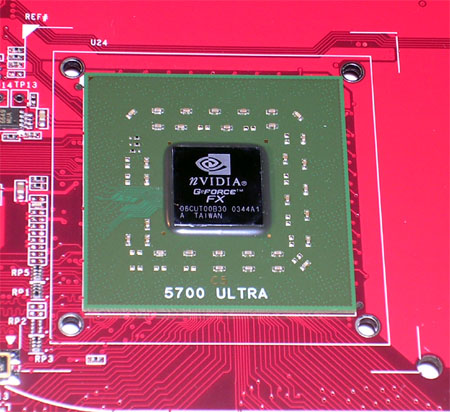

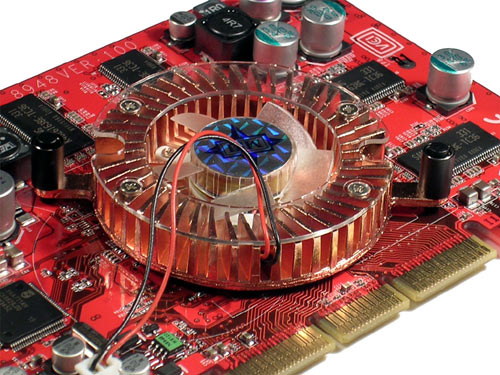

The retail bundle is traditionally rich, which is normal for MSI. In some ways, MSI is even better at this than ASUS. The only thing we'd like to see from MSI in the near future is an updated selection of bundled games. Clearly, the company is reluctant to pay much in royalties, but the games shipped with the MSI FX5700U-TD128 can no longer be regarded as novelties, and unless the company updates the bundled software for the product line in the near future, it runs the risk of losing to its competitors.

Design and layout

The MSI FX5700U-TD128 is fully based on NVIDIA's reference design. All the components and power cells are positioned strictly in accordance with the reference. The board's cooling system, although different from the reference one, brings no radical changes.

The board is made on a bright red textolite PCB and offers 128 MB of DDR memory, an AGP 2x/4x/8x interface, and a standard set of outputs: one analog, one digital, and one TV-OUT. The TV-OUT functionality is implemented by the NV36 chip itself. No VIVO functionality is provided: the pad for a VIVO chip is wired (normally a Philips SAA7108AE chip would sit there), but no chip is installed.

The video card is equipped with 128 MB of DDR memory in 8 chips (4 on each side of the board, front and rear) in advanced BGA packaging, with a 128-bit memory bus. The memory is produced by Samsung (K4N26323AE-GC 2.2) and offers 2.2 ns access time, which corresponds to approximately 450 MHz (900 MHz DDR), the frequency at which the memory actually runs. The graphics chip runs at 475 MHz, as per the specifications.

Let's feast our eyes on the NVIDIA GeForce FX 5700 Ultra graphics chip itself. As can be seen from the marking, the chip was manufactured in week 44 of 2003 and is of revision A1.

The socket for additional onboard power is wired in precisely the same way as on the NVIDIA reference board. The cooling system does not offer any extra "luxuries". The memory chips are covered with small golden heatsinks on both the front and rear sides of the PCB. The nice thing is that, with its board built on the NVIDIA GeForce FX 5700 Ultra chip, MSI decided not to follow the many manufacturers who have lately been keen on fitting memory heatsinks on the front side only, for "cosmetic" purposes, so to say :). The NV36 graphics chip is cooled by a sizeable heatsink blown by a no less massive fan. The NV36 chip does not consume as much power as NV38 does, so this solution by MSI engineers seems quite sufficient. If we recall the cooling system used on the NVIDIA reference board, this conclusion is supported even more.

MSI FX5700-VTD128

Package bundle:

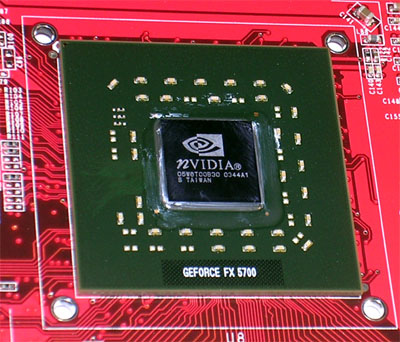

As expected, the package bundle is essentially the same as that of the FX 5700 Ultra version of the MSI graphics card. We saw something truly distinctive only in the bundle of MSI's high-end card built on the NVIDIA GeForce FX 5950 Ultra chip.

Design and layout

The MSI FX5700-VTD128 is fully based on NVIDIA's reference design. Note that the board's layout has been considerably simplified relative to NVIDIA GeForce FX 5700 Ultra. The PCB component layout more closely resembles NVIDIA GeForce FX 5600. This is easily explained by the much lower operating frequencies of FX 5700 relative to the Ultra modification, as well as by the use of regular DDR-1 memory (remember that NVIDIA GeForce FX 5700 Ultra uses expensive DDR-2 memory, which is sensitive to heat).

The board has MSI's traditional bright red PCB and offers 128 MB of DDR memory, an AGP 2x/4x/8x interface and a standard set of outputs for a VIVO card: one analog, one digital, and one VIVO port. The TV-OUT functionality is implemented by the NV36 chip itself. The onboard VIVO functionality is implemented with a Philips SAA7108AE chip, traditional for NVIDIA boards.

The video card is equipped with 128 MB of DDR memory in 8 chips (4 on each side of the board, front and rear) in regular TSOP packaging, with a 128-bit memory bus. The memory is produced by Samsung (K4D261638E-TC 3.6) and offers 3.6 ns access time, which corresponds to approximately 275 MHz (550 MHz DDR), the frequency at which the memory actually runs. The graphics chip runs at 425 MHz, as per the specifications.

Let's feast our eyes on the NV36 graphics chip itself. As can be seen from the marking, the chip was manufactured in week 44 of 2003 and is of revision A1.

The cooling system does not offer any extra "luxuries". No cooling is provided for the memory chips, and the slow 3.6 ns memory does not need it. The NV36 chip is cooled by a middle-sized fan. The cooling system does a simply fantastic job: many hours of 3D tests (including those in overclocked mode) gave no reason for doubt. The board ran through all the tests without an issue:

Tests

In today's test session, we used 4 cards altogether. We already touched upon the positioning of middle-priced boards in the introduction, but it's high time we came back to the matter. Given the current market situation, we can safely say that NVIDIA GeForce FX 5700 should be positioned against ATI Radeon 9600 Pro: it is the boards built on these chips that are priced on par. NVIDIA GeForce FX 5700 Ultra is positioned against ATI Radeon 9600XT, although the pricing factor is definitely not in favor of the former. This should be taken into consideration when looking at the results for video cards built on the NVIDIA GeForce FX 5700 Ultra chip. Therefore, today we'll be testing the following video cards:

MSI FX5700-VTD128 vs Sapphire Radeon 9600 Pro, and MSI FX5700U-TD128 vs ASUS Radeon 9600XT.

Test configuration:

We have finally upgraded our test configuration. It is now a fairly powerful system based on an AMD Athlon XP 3200+ Barton (200x11 = 2200 MHz).

We removed all the decorative "niceties" from the Windows GUI and set the operating system to maximum performance. Vsync was forcibly disabled via the drivers in both OpenGL and Direct3D applications. S3TC texture compression was also disabled.

Test software:

| Video card | Max core / memory clock |
| --- | --- |
| MSI FX5700-VTD128 | 480 MHz / 665 MHz |
| MSI FX5700U-TD128 | 545 MHz / 1030 MHz |
| NVIDIA GeForce FX 5700 Ultra reference | 570 MHz / 1080 MHz |

We can't say anything about the general overclocking potential of video cards built on the NVIDIA GeForce FX 5700 GPU, since this is the first board built on this GPU that we have tested. However, the board demonstrated simply fantastic overclocking results relative to its nominal clocks.

The MSI FX5700U-TD128, on the other hand, showed a disappointingly small overclocking margin compared to the NVIDIA GeForce FX 5700 Ultra reference board. However, as practice shows, very few cards are able to compete with the NVIDIA reference in this respect, so there is no reason to speak of a low overclocking potential of MSI's video cards built on the NVIDIA GeForce FX 5700 Ultra chip. We'll be able to say something specific only after testing a number of production video cards built on the NVIDIA GeForce FX 5700 Ultra chip by various manufacturers. For now we can only note the quite good overclocking potential of the MSI FX5700U-TD128 relative to its nominal clocks :)
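To put the overclocking margins into numbers, here is a small Python sketch that computes the gain of the maximum clocks over the nominal ones. It assumes that the memory figures in the overclocking table are effective DDR rates (so they are compared with 550 and 900 MHz DDR), and that the reference board's nominal clocks are the standard FX 5700 Ultra ones (475 MHz / 900 MHz DDR). The helper names are ours.

```python
from typing import Tuple

# Nominal clocks: (core MHz, effective DDR memory MHz), as quoted in the review.
NOMINAL = {
    "MSI FX5700-VTD128": (425, 550),
    "MSI FX5700U-TD128": (475, 900),
    "NVIDIA GeForce FX 5700 Ultra reference": (475, 900),  # assumed spec clocks
}

# Maximum clocks reached during overclocking, taken from the table.
ACHIEVED = {
    "MSI FX5700-VTD128": (480, 665),
    "MSI FX5700U-TD128": (545, 1030),
    "NVIDIA GeForce FX 5700 Ultra reference": (570, 1080),
}


def gain_percent(nominal: float, achieved: float) -> float:
    """Relative clock gain over the nominal value, in percent."""
    return (achieved - nominal) / nominal * 100.0


def overclock_gains(card: str) -> Tuple[float, float]:
    """Return (core gain %, memory gain %) for the given card."""
    nominal_core, nominal_mem = NOMINAL[card]
    max_core, max_mem = ACHIEVED[card]
    return gain_percent(nominal_core, max_core), gain_percent(nominal_mem, max_mem)


for card in NOMINAL:
    core_gain, mem_gain = overclock_gains(card)
    print(f"{card}: core +{core_gain:.1f}%, memory +{mem_gain:.1f}%")
```

Under these assumptions, the non-Ultra board gains the most on memory, while the MSI Ultra board falls a few percentage points short of the reference board on both core and memory.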

Well, it's good to analyze the overclocking of boards, but we remind you for the hundredth time that overclocking is not a guaranteed feature of a video card, that it may vary from sample to sample, and that we can't be held responsible for equipment that becomes unusable after overclocking.

As usual, we start with our substantially revised benchmarking package.

Deja vu? The results of this synthetic benchmark, which analyzes the execution speed of various shaders, may well have become tiresome by now. It has long been known that version 2.0 shaders run quite poorly on NVIDIA GeForce FX, and that little can help other than close cooperation with game developers who optimize their engine code specifically for the NVIDIA architecture, which is being done successfully. The results speak for themselves if we recall our recent tests with FarCry, which is part of the NVIDIA initiative dubbed "The way it's meant to be played": boards on NVIDIA chips lagged quite a bit behind ATI cards. Anyway, we repeat that such an approach only works around the problem, and NVIDIA can radically improve its position only by releasing a new chip.

For now we can merely note that in this test NVIDIA chips lag behind similarly positioned ATI chips, sometimes by a factor of three.

This test assesses the speed at which the accelerator processes geometry. It allows choosing among the following illumination models (computed at the vertex level):

We used the most advanced mode, with three diffuse-specular light sources, and the simplest mode, with ambient illumination. Each was combined with three operating modes: the traditional TCL (Fixed-Function Pipeline), version 1.1 vertex and pixel shaders, and version 2.0 vertex and pixel shaders. With ambient illumination (the simplest constant illumination) and transformation, we reach the card's practical limit for triangle throughput.

Let's look at the test results. The first case is the simplest mode, ambient lighting, in each of the three operating modes. Despite the greater number of vertex processors in NVIDIA chips (3 in NVIDIA GeForce FX 5700 and NVIDIA GeForce FX 5700 Ultra versus merely 2 in ATI Radeon 9600 Pro and ATI Radeon 9600 XT), the NVIDIA chips hold no advantage in geometry processing with the simplest light source. The results do not change when switching from hardware T&L emulation to version 1.1 and 2.0 shaders. Here ATI Radeon 9600 XT is the undisputed leader. This is natural: results in the ambient lighting mode depend largely on the clock speed of the graphics chip, and ATI Radeon 9600XT has the highest clock of all the accelerators presented here. The NVIDIA GeForce FX 5700 chip is definitely not bright in terms of geometry and takes last place.

Now we move from the simplest lighting mode to the most advanced model, with three diffuse-specular light sources. Here the picture changes radically from mode to mode and requires additional explanation. In the Fixed-Function Pipeline mode, NVIDIA boards lead by a considerable margin. This victory is quite natural: ATI chips have no hardware T&L units and can only emulate them, whereas NVIDIA chips include hardware units responsible for light source computation.

With the transition to version 1.1 vertex shaders, the peak results for NVIDIA drop significantly, by a factor of two. And finally comes the most exciting part, version 2.0 shaders: ATI chips take the lead! This is quite natural, since any function implemented with version 2.0 pixel and vertex shaders runs strikingly slower on NVIDIA chips than with DX 8.1 shaders. Here the flaws in the NVIDIA GeForce FX architecture make themselves felt.

This test performs a number of tasks, but we were mostly interested in measuring frame buffer fill performance. We ran it in two configurations: with a 256x256 texture and without one.

The frame-buffer fillrate values produced by the ATI chip in this test do not match the theoretical maximum announced by ATI at all, but with NVIDIA everything is almost exactly as expected in the Texelrate (Color+Z) mode.

The 4x1 architecture of NVIDIA GeForce FX 5700 Ultra and NVIDIA GeForce FX 5700 is evident in this test: as noted above, the results fully match this configuration of pixel processors and texture units at the chip's clock speed. The peak values fall short of the theoretical ones by merely 40-50 million pixels per second, which, given the absolute values, can be regarded as a minor error. ATI chips, however, do not reach their theoretically announced pixel fillrate in this test. For example, the declared figure for the ATI Radeon 9600XT chip is 500x4 = 2.0 Gpixels/s, but in fact we get merely 1.1 Gpixels/s, which is roughly half the theoretical maximum. We can't tell the cause of such behavior of ATI chips, but this is not the first time: during tests of ATI Radeon 9800XT we got exactly the same odd results.
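The theoretical figures above follow from a simple product of core clock and pixel pipeline count. The Python sketch below reproduces that arithmetic; it assumes 4 pixel pipelines for both NVIDIA chips (the 4x1 configuration named in the text) and the core clocks quoted in this review, and uses the 1.1 Gpixels/s measured for the Radeon 9600XT. The function names are ours, and no measured values are invented for the NVIDIA chips.

```python
def theoretical_fillrate_gpix(core_mhz: float, pixel_pipelines: int) -> float:
    """Theoretical peak pixel fillrate in Gpixels/s: one pixel per pipeline per clock."""
    return core_mhz * pixel_pipelines / 1000.0


def efficiency(measured_gpix: float, theoretical_gpix: float) -> float:
    """Fraction of the theoretical fillrate actually reached in a test."""
    return measured_gpix / theoretical_gpix


# ATI Radeon 9600XT: declared 500 MHz x 4 pipelines, measured 1.1 Gpixels/s.
radeon_peak = theoretical_fillrate_gpix(500, 4)
print(f"Radeon 9600XT: theoretical {radeon_peak:.1f} Gpix/s, "
      f"reached {efficiency(1.1, radeon_peak):.0%} of it")

# NVIDIA chips: theoretical peaks only (4x1 configuration, clocks from the review).
for chip, core_mhz in (("GeForce FX 5700", 425), ("GeForce FX 5700 Ultra", 475)):
    print(f"{chip}: theoretical {theoretical_fillrate_gpix(core_mhz, 4):.1f} Gpix/s")
```

Against peaks of 1.7 and 1.9 Gpixels/s, the 40-50 million pixels per second shortfall reported for the NVIDIA chips amounts to only about 2-3%.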

With one more bilinear texture sample added, the peak pixel fillrate values dropped, but the general picture remained as before.

This test in the D3D RightMark benchmarking package estimates the performance of various version 2.0 pixel shaders. The geometry in this test has been substantially simplified to minimize the dependence of the results on the chip's geometric performance and to measure the pixel pipelines only. We ran the test with both 16-bit and 32-bit floating-point precision (switching between precision modes is relevant for NVIDIA chips only).

NVIDIA chips were crushed when executing version 2.0 shaders with 32-bit floating-point precision. Yes, we can make allowance for the ATI chip running at its customary 24-bit precision, but let's look at the fps values produced with 16-bit floating-point precision. In this case the NVIDIA chip has the advantage, since it uses lower precision than the ATI chip (whose absolute values, as expected, do not change, since it always runs at 24-bit).

Yes, the fps values for the NVIDIA chip went up as expected, but in the end it is again a fiasco. According to the test results, the NVIDIA chip executes pixel programs at half the speed of ATI chips.

This test measures the accelerator's speed at displaying point sprites. Let's enumerate the adjustable test parameters:

In the test settings, we used 2 diffuse light sources and enabled animation. We also investigated how the speed of displaying point sprites depends on the vertex shader version used.

On the whole, NVIDIA chips are definite outsiders here. See for yourselves: NVIDIA GeForce FX 5700 Ultra shows results precisely on par with ATI Radeon 9600Pro, the chip NVIDIA GeForce FX 5700 should have competed with, while NVIDIA GeForce FX 5700 itself lagged quite substantially in this test (its low clock speeds must have played a bad trick on it).

This test estimates how efficiently the accelerator removes hidden points and primitives. The randomly generated scene is displayed in one of three selectable modes:

We can also investigate how the efficiency of hidden point and primitive removal depends on the vertex shader version used (1.1 or 2.0).

The NVIDIA GeForce FX 5700 Ultra chip is beyond competition. The NVIDIA GeForce FX 5700 also ranks pretty well. However, our settings in this test play in favor of NVIDIA chips: we perform texture sampling, which can't give us 100% adequate results when estimating the efficiency of the chip's HSR unit. In the near future, this part of our analysis will be complemented with one more HSR efficiency estimation mode to give a clearer idea in this regard.

The version of vertex programs did not change the balance of power.

The well-known Codecreatures benchmark shows a definite lead for the MSI boards built on NVIDIA chips. NVIDIA optimizations, added to the good characteristics of the NVIDIA GeForce FX 5700 and NVIDIA GeForce FX 5700 Ultra chips, give a result that is not bad at all: NVIDIA GeForce FX 5700 Ultra is the indisputable leader, and NVIDIA GeForce FX 5700 shows results on par(!) with ATI Radeon 9600XT.

Tests run with antialiasing did not change the balance of power among the boards.

But because of the implementation specifics of the AF algorithms used in NVIDIA chips, the balance of power changes a bit, and ATI Radeon 9600XT finally overtakes the MSI board built on the NVIDIA GeForce FX 5700 chip.

Traditionally, we tinker with 3DMark too :). There have been many occasions when we had reason to doubt the evidence of this benchmark, so rather than judge once again who was right or wrong, we simply present the results of this comprehensive package for general information only :).

From synthetic applications, we now move on to analyzing the performance of the graphics boards in real gaming applications.

The game is well optimized for the NVIDIA architecture, and it shows: the MSI board built on the NVIDIA GeForce FX 5700 Ultra chip is the undisputed leader, and the board based on the NVIDIA GeForce FX 5700 chip takes a much-needed lead over ATI Radeon 9600 Pro.

The antialiasing mode does not change the overall balance of power.

Nor has anisotropic filtering affected the balance of power, and the leader is the same: MSI FX 5700 Ultra.

Accordingly, the combined AA+AF modes show the same balance of power, with no surprises.

As we remember, the geometric performance of the NVIDIA GeForce FX 5700 Ultra chip with version 1.1 vertex shaders and the most advanced lighting mode was higher than that of the ATI Radeon 9600XT chip. Looking at the results for Unreal 2, you can clearly see the synthetic results carried over into a real-world gaming application. Much in this benchmark depends on the geometric performance of the accelerator, and since version 2.0 vertex shaders, so unloved by NVIDIA accelerators, are little used, the MSI card based on the NVIDIA GeForce FX 5700 Ultra chip takes the lead in a test that similarly ranked ATI boards have traditionally led.

In this OpenGL application, built on a revamped Quake 3: Arena engine, the MSI board built on the NVIDIA GeForce FX 5700 Ultra chip takes the lead, but the MSI board built on the NVIDIA GeForce FX 5700 chip ranks very poorly relative to ATI Radeon 9600 Pro.

But the "image quality improvement techniques" give the NVIDIA GeForce FX 5700 Ultra video card a second wind: at some points in this mode, it does much better than even a board built on the ATI Radeon 9600 Pro chip.

The "Ultra Shadow" technology implemented in NVIDIA chips allows them to overtake ATI boards easily in this test. Thanks to this technology, stencil shadows, a rendering technique widely used in the game, are built faster. Similar effects will be used in the forthcoming DooM III, so draw your own conclusions, folks =).

Note that the MSI board built on the NVIDIA GeForce FX 5700 chip shows results on par with ATI Radeon 9600XT, while NVIDIA GeForce FX 5700 Ultra shows an even greater margin of superiority.

Unfortunately, for now we have no information on the engine of this benchmark and can merely note the lead of the MSI board built on the NVIDIA GeForce FX 5700 Ultra chip, as well as the complete parity between boards based on the NVIDIA GeForce FX 5700 chip and ATI Radeon 9600 Pro.

The benchmark makes active use of version 2.0 shaders, which shows in the results: ATI boards lead in this test.

This is a pseudo-DirectX 9.0 test based on a gaming engine. The game uses version 2.0 vertex programs but version 1.1 pixel programs. NVIDIA boards feel comfortable enough here, despite their unforgivably slow handling of DirectX 9.0 shaders, which, although not used to the full, play far from the least part here.

The game uses version 2.0 pixel and vertex programs. Besides, it offers a wonderful feature for testers: the ability to force versions 1.1, 1.4 and 2.0 of pixel and vertex programs. However, we gave up using shader versions other than 2.0 in this test quite a while ago, because we are mostly interested in board performance in DirectX 9.0, and because we have shown many times the performance difference between versions 1.1 and 2.0 of shader programs for video cards based on both ATI and NVIDIA chips.

As usual, we note the much higher speed of ATI boards in DirectX 9.0 applications.

The Tomb Raider: Angel of Darkness engine is a technically advanced DirectX 9.0 solution. The game uses version 2.0 pixel and vertex shaders, which, considering the results of the synthetic tests, is not going to bring anything good for NVIDIA boards.

Practice shows just that. In scenes that make the most intense use of version 2.0 pixel shaders, the results for NVIDIA boards are simply devastating. Things get better on switching to simpler, non-shader scenes, but NVIDIA boards still fail to win the performance crown.

It is still premature to draw conclusions about any particular accelerator based on test results produced with the raw leaked alpha, because much will change by the final release (and we are pretty sure much will be changed). Nevertheless, it won't be a mistake to say that many readers will be curious to see the balance of power among graphics chips in this very benchmark.

NVIDIA GeForce FX 5700 failed to cope with the minimum task and lost to its direct competitor, a board built on the ATI Radeon 9600 Pro chip. And that despite the flexible FireStarter engine, which is able to tune itself to the architecture of a particular accelerator (for details, read our review of the ASUS Radeon 9600XT video card). But the MSI board built on the NVIDIA GeForce FX 5700 Ultra chip feels comfortable here: the greater number of vertex processors and the higher chip and memory clocks make themselves felt. All this gives the board based on the NVIDIA GeForce FX 5700 Ultra chip the lead in a test that does not use version 2.0 shaders.

In the game scene, the absolute fps values changed substantially, but the balance of power remained the same.

But the combined AA+AF modes give a radically new balance of power, with ATI boards in the lead. It's hard to say whether this is related to the implementation specifics of anisotropic filtering or AA in NVIDIA and ATI chips or is something specific to the game, but the fact remains that image improvement techniques give more advantage to ATI boards.

FarCry is a bright example of what we can expect from high-end boards with support for DirectX 9.0. The beauty demonstrated even by the raw demo (despite its official release) gives food for thought: are mid-range graphics boards future-proof enough? =)

For now, let's see the results for NVIDIA GeForce FX 5700 Ultra in this test. To put it mildly, the board does not shine here. This looks more like a pattern: with lots of DirectX 9.0 generation pixel shaders, GeForce FX boards lag well behind. And that is despite the considerable work the game developers did on optimizing the engine for the architectural specifics of NVIDIA boards, since FarCry is a participant of NVIDIA's "The way it's meant to be played" initiative.

Regarding the NVIDIA GeForce FX 5700 chip, the findings are not as optimistic as for NVIDIA GeForce FX 5700 Ultra. With prices for boards built on the ATI Radeon 9600 Pro and NVIDIA GeForce FX 5700 chips currently on par, we can safely claim that NVIDIA GeForce FX 5700 is not 100% worth its money. As usual, NVIDIA boards show unforgivably low performance in games that make active use of version 2.0 shaders, and such games are not playable on them. Although NVIDIA offers advantages in old OpenGL and DirectX 8.1 applications, they are quite minor, and in most of the tests we ran there is even complete parity. So, what do we get? Equal prices, approximately the same performance in old applications, and a tremendous advantage for graphics boards built on the ATI Radeon 9600 Pro chip in shader applications. Nevertheless, it's better to decide whether to buy a card based on the market situation on the date of purchase. We don't rule out that prices for boards may change, maybe even in the near future, but let's draw conclusions from today's state of affairs. Regarding the NVIDIA GeForce FX 5700 Ultra chip, we already drew conclusions in the material dealing with tests of the NVIDIA reference board. To date, we can only add the reservation that, for this board too, prices relative to ATI Radeon 9600XT are not in favor of NVIDIA's offspring, but in this case that is partly compensated by good performance (which is again insufficient for applications that make intense use of version 2.0 shaders).

As regards the MSI FX5700-VTD128 video card, we again see an excellent package bundle (even MSI's non-N-Box packages are beyond all praise), good overclocking potential and high manufacturing quality. All this applies equally to the MSI FX5700U-TD128 board. But in the end, everything will depend on the price of the final products on the market.
