All you need to know about your products!

3DNews Vendor Reference English Resource


nVidia GeForce FX5200 (NV34) Video Card Review

Date: 29/05/2003

The NV30 was announced quite a long time ago, but video cards based on the nVidia GeForce FX 5800 (NV30) chip are priced beyond the reach of many buyers, and others simply aren't willing to pay extra for speed they don't badly need. Usually, the release of a flagship model is followed by cut-down versions that let the manufacturer cover every market sector. As of May 2003, nVidia is producing three chips based on the FX architecture. To be more precise, FX 5600 video cards will reach mass sales only next month; for now, stock is being built up.

To be absolutely honest, as we predicted, the NV30 will never be released to the mainstream market and is aimed solely at the professional Quadro product line, where price is not as decisive as it is in the consumer market. In the roadmaps of all video card manufacturers, the NV30 has been replaced with the NV35. Nevertheless, we did include the NV30 in our comparative table: much water will have flowed under the bridge by the time the NV35 reaches real-life retail.

Comparison data for the FX family video cards:

Today we are presenting benchmarks of nVidia GeForce FX 5200 (NV34) video cards; in the near future we'll follow up with an article on the mid-range GeForce FX 5600 (NV31) chip.

Distinctions of the NV34 Chip

To date, nVidia is releasing a line of three FX-family GPUs, one for each of the three market sectors, with each chip produced in two versions, regular and Ultra:

As we can see, nVidia has given up using the MX label to designate its low-end products. You can now forget about the MX... Making the chip and the video card cheaper comes at a price. Here is a list of the main differences between the high-end NV30 and the NV34. So, what is missing in the NV34:

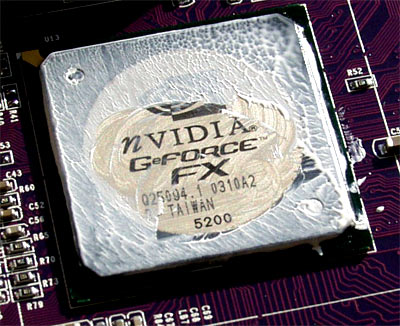

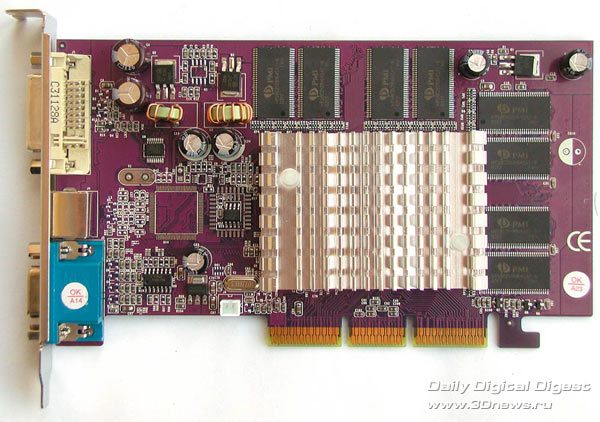

The nVidia GeForce FX 5200 Chip

The chip has 45 million transistors and is built on a 0.15-micron process. GeForce FX 5200 offers 2 pixel pipelines and 4 texture units. But all this is very relative, because these days a video card can hardly be judged by its number of pipelines of one type or another alone: the driver configures the operation of the chip for each particular scene of a game. All in all, the 3D capabilities of the NV34 do not differ from those of the NV30/31. The NV34 supports the DirectX 9 API, and thus shaders 2.0 and 2.0+.

There are differences, of course: the GeForce FX 5200 chip does not offer IntelliSample optimization. Its memory interface also differs from that of its higher-end brother, the NV30. The chip uses a standard DDR memory controller, which in theory leads to substantial performance drops, especially with anisotropic filtering and FSAA enabled. GeForce FX 5200 chips do not support HDTV, but they do integrate a TV codec, a TMDS transmitter and two 350 MHz RAMDACs. Today, however, that is unlikely to surprise anyone.

Over its long development and short life, the GeForce FX 5200 has had its "recommended" core and memory clock speeds changed at least 10 times. The problem is that the first tests of pre-production samples showed performance so astonishingly low that launching video cards in that state would have been out of the question, even fatal. We'd rather not publish those first raw results, since that simply wouldn't be fair to nVidia, but I can assure you they were much lower than those of the MX440. The main causes were buggy Detonator drivers that were not suited to an entirely new chip architecture, and the really low clock speeds of the new chips.
Gradually, things evened out: the standard recommended clock speeds had to be raised, every new driver revision improved the performance of the video cards, and the chip yield gradually approached reasonable norms for the process. In the comparison table at the beginning of the article we listed the "original" core and memory clock speeds, but real cards may differ, as you can see for yourself when buying one in a shop near you. Video card manufacturers are not shy about varying these values over a wide range... The direct competitors of the new chips are the Radeon 9000 and 9000 Pro, which will soon be replaced by the Radeon 9200 and 9200 PRO.

Daytona GEF FX5200

Video cards from this manufacturer have always stood out for their low price and middling manufacturing quality.

The video card has an AGP 2x/4x/8x interface. The layout is nonstandard, which is no surprise, because Daytona video cards are known for their own specific layout and design. The cooling is standard and passive: a mid-sized needle-shaped heatsink. Its efficiency on such a chip is rather doubtful, since the video card heated up considerably anyway, and for overclocking it is advisable to replace the cooler with something more suitable. The memory chips are not covered with heatsinks.

The video card is equipped with 128 MB of memory with a 6 ns access time. The memory, made by PMI, is marked HP58C2128164SAT-6. That explains the missing memory cooling: the slow 6 ns memory does not heat up much. The core and memory clock speeds of the Daytona GEF FX5200 are 250 MHz and 150 (300 DDR) MHz, respectively. Warning! The memory frequency on this board is lower than nVidia's latest recommendation of 200 (400 DDR) MHz.

The card has a standard set of outputs: analog, digital (DVI) and TV-Out. The TV-Out is implemented by the GeForce FX 5200 chip itself, since the TV-Out features are already integrated in it.
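The memory figures quoted above can be checked with a little arithmetic. The Python sketch below assumes that the 6 ns rating is the memory chips' minimum clock cycle time, which is the usual meaning of such ratings on graphics memory, although the review itself only calls it access time. The function and constant names are purely illustrative.

```python
def ddr_effective_mhz(clock_mhz: float) -> float:
    """DDR transfers data on both clock edges, so the effective rate is twice the clock."""
    return 2 * clock_mhz


def rated_clock_mhz(cycle_time_ns: float) -> float:
    """Highest clock (MHz) for a chip with the given minimum cycle time in ns.

    Assumption: the ns rating is a clock cycle time, so f = 1 / t.
    """
    return 1000.0 / cycle_time_ns


# Figures taken from the review text.
CARD_MEMORY_MHZ = 150.0         # Daytona GEF FX5200 memory clock
RECOMMENDED_MEMORY_MHZ = 200.0  # nVidia's latest recommendation
MEMORY_RATING_NS = 6.0          # rating of the PMI memory chips

card_effective = ddr_effective_mhz(CARD_MEMORY_MHZ)
recommended_effective = ddr_effective_mhz(RECOMMENDED_MEMORY_MHZ)
shortfall = 1 - CARD_MEMORY_MHZ / RECOMMENDED_MEMORY_MHZ
rated = rated_clock_mhz(MEMORY_RATING_NS)

print(f"Card memory:        {CARD_MEMORY_MHZ:.0f} MHz ({card_effective:.0f} DDR)")
print(f"Recommended memory: {RECOMMENDED_MEMORY_MHZ:.0f} MHz ({recommended_effective:.0f} DDR)")
print(f"Shortfall vs. recommendation: {shortfall:.0%}")
print(f"Rated clock of {MEMORY_RATING_NS:.0f} ns memory: about {rated:.1f} MHz")
```

Under that assumption, 6 ns memory is rated for roughly 166 MHz, which would explain why the board runs it at 150 MHz, a quarter below the recommended 200 MHz, rather than at the recommended speed.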
It sometimes ships in a retail box, but the bundle is the same as in the OEM version, i.e. the Daytona GEF FX5200 card plus a driver CD.

Benchmarking Results

Unreal Tournament 2003

The FX 5200 lags well behind both its direct competitor, the ATI Radeon 9000, and its predecessor, the GeForce 4 MX480. The ATI Radeon 9000 and nVidia GeForce 4 MX480 demonstrated about the same results.

In the botmatch test the ranking is the same, but the gap between the nVidia GeForce FX and the ATI Radeon 9000 / nVidia GeForce 4 MX480 has shrunk, although it is still not small: 4-8 fps on average:
