ForceWare 52.16: NVIDIA's retaliation

Introduction

It's no longer a secret that the pixel and vertex shader performance of NVIDIA's products, starting with the NV30, has been unacceptably low compared with ATI's products in the same class. In synthetic benchmarks like 3DMark 2003, the problems were "solved" quite well by new drivers with embedded "optimizations", which often degraded image quality or used frankly fraudulent techniques to push up the scores. But with the release of new benchmarks and, most importantly, DirectX 9.0 games that use pixel and vertex shaders of version 2.0, the situation for NVIDIA chips kept getting worse. We'll also look at 3DMark 2003 in today's testing session. Its results are especially interesting in view of the newly released patch (v340).

Let's recall the new games released so far, and the tests run by numerous publications that revealed a hard-to-imagine performance drop in scenes making heavy use of version 2.0 pixel and vertex shaders.

"Tomb Raider: The Angel of Darkness", "Halo: Combat Evolved", "AquaMark 3", and a beta version of "Half-Life 2" that "escaped" online just in time: in all these games and benchmarks built on real gaming engines, NVIDIA boards were, to put it mildly, not superior, and even flagship models produced results comparable to ATI's mid-range boards (this refers to the tests of the Half-Life 2 beta). That's why NVIDIA boards were dubbed "slow cards" in DirectX 9.0, and rightfully so. We'll try to find the cause of these results in the theoretical section of the review.

Of course, NVIDIA is not sitting idle: the programmers of the Californian company have released new ForceWare drivers that are meant to put an end to the complaints about low shader performance in the NV3x family (by the way, nine months ago, at the last CeBIT, Alan Tike claimed the Detonator name would live forever).

In the new driver, along with the traditional bug fixes and new features, the vertex and pixel shader compilers have been significantly revised, which should increase rendering speed without affecting image quality. It is this new algorithm for handling pixel and vertex programs that prompted the change of the driver name. So you can forget about Detonator, so often criticized recently =). The new drivers are called ForceWare, and currently the only WHQL-certified version available is 52.16. In the theoretical part of our review, we'll also consider what NVIDIA's programmers actually changed in the driver to warrant a new name. The renaming is partly a marketing move, of course, but as practice shows, NVIDIA has every reason for it.

The NVIDIA ForceWare v52.16 driver

The situation with shaders on the NV3x is not as straightforward as it may seem at first glance. It would be a mistake to assume that the problem lies solely in the hardware. It is also rooted in an architectural feature of NVIDIA cards: they use 32-bit floating-point precision. ATI boards, in turn, perform all computations at 24-bit precision, the minimum allowed by the DirectX 9.0 specification. But it would also be wrong to claim that NVIDIA cards built on the CineFX architecture work only at 32-bit precision. In fact, the architecture of the FX family is more flexible than that of ATI's DirectX 9.0 chips: it allows switching between the resource-intensive 32-bit precision and the less demanding "halved" 16-bit floating-point precision. A 12-bit (integer) mode is available as well.

Of course, 32-bit precision requires much more computational work than 24-bit or, even more so, 16-bit floating-point precision. And it is 32-bit precision that NVIDIA's drivers used in most cases. Naturally, ATI's boards, with their strictly fixed 24-bit precision, were able to show much higher performance in applications that make heavy use of version 2.0 pixel programs. What does the DirectX 9.0 specification say about this? It says that floating-point precision should be at least 24 bits per color channel. That is, ATI chips fully meet the DirectX 9.0 specification, while NVIDIA exceeds that level by using 32-bit precision, at the cost of those precious benchmark points and FPS in games. The company's attempt to switch the chip into 16-bit mode got a mixed reception and ended in accusations of fraud against NVIDIA.
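To get a feel for what these widths mean numerically, here is a minimal Python sketch that rounds a value to each format. The bit layouts (23, 16 and 10 fraction bits for the 32-, 24- and 16-bit formats) are assumptions based on commonly cited descriptions of these chips, not figures from this article, and the helper names are made up for illustration. Exponent range and special values are ignored.

```python
import math

# Fraction (mantissa) bits per format. ASSUMPTION: these layouts come from
# common descriptions of the hardware, not from the article itself.
FRACTION_BITS = {"fp32": 23, "fp24": 16, "fp16": 10}


def quantize(value: float, fraction_bits: int) -> float:
    """Round `value` to the nearest number with `fraction_bits` fraction bits.

    Only the mantissa width is modelled; exponent range, denormals and
    overflow are ignored, so keep inputs to ordinary magnitudes.
    """
    if value == 0.0:
        return 0.0
    mantissa, exponent = math.frexp(value)   # value == mantissa * 2**exponent
    scale = 2 ** (fraction_bits + 1)         # +1 for the implicit leading bit
    return math.ldexp(round(mantissa * scale) / scale, exponent)


def rounding_error(value: float, fmt: str) -> float:
    """Absolute error introduced by storing `value` in format `fmt`."""
    return abs(quantize(value, FRACTION_BITS[fmt]) - value)


if __name__ == "__main__":
    for fmt in ("fp32", "fp24", "fp16"):
        print(fmt, quantize(0.1, FRACTION_BITS[fmt]), rounding_error(0.1, fmt))
```

Under these assumptions the rounding error on a value such as 0.1 grows at each step down in width, which is the trade-off behind the speed difference discussed above.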

However, in our view, the Californian company's moves in this case are quite understandable: otherwise the odds are in favor of ATI cards. But why did NVIDIA design its FX chips the way we see them today instead of following ATI's route and adopting a rigidly fixed 24-bit precision? In that case, the two companies would have competed by raising chip and memory clock speeds and by producing various modifications of architecturally identical boards (which, in fact, they are doing successfully anyway, but then the cards would indeed have been on par). The thing is that NVIDIA, confident in its strength, developed the GeForce FX architecture expecting programmers to write shader programs in its proprietary Cg language, which is better adapted to NVIDIA cards. That is no wonder, actually: at the time the company was strong enough and had every reason for such confidence.

And, as we remember quite well, along with the advertising of the chips' potential there was hype about an unprecedented level of programmability and freedom for programmers writing shader code for CineFX cards. However, practice showed that programming FX chips is quite a complicated and laborious task. ATI chips turned out to cope much more easily with code produced by the Microsoft HLSL (High Level Shader Language) compiler at 24-bit precision. That is, ATI chips run faster partly because most shader programs are written with the standard Microsoft compiler, whereas NVIDIA bet on its own joint development and, as we can all see, paid for it in the end. Of course, NVIDIA's GeForce FX chips have purely hardware-related problems too, but the issue of floating-point precision adds more headaches for NVIDIA.

So, what really matters to the end user? The end user who simply plays the most recent DirectX 9.0 games (there are not many of them for the moment, but things are improving, which is nice) doesn't care at all how the code is compiled, what floating-point precision it uses, etc. =). What really matters to the end user is the quality of the image displayed. If the quality doesn't drop and the speed goes up, why not use 16-bit precision? But here the subjective matter of quality assessment arises, so it should be up to the users themselves to decide.

Clearly, NVIDIA had to do something about the drivers. The company's "energetic moves" to introduce 16-bit floating-point precision were received by the public, to put it mildly, without enthusiasm, which is quite logical and was to be expected, so other ways to solve the problem had to be found. The first step was the release of the new ForceWare driver series. We'll talk about the optimizations that don't degrade image quality in more detail later on.

First off, let's talk about the purely "cosmetic" modifications.

At first glance, they are not very noticeable. The design of the menus hasn't changed much since the 40th series. There is no conceptually new approach to building the menus, and none is needed, because the author of this review is perfectly content with the menu layout and GUI of the 40th series.

The antialiasing and anisotropic filtering settings are gathered in the same section and offer three quality modes:

- "High Performance";

- "Performance";

- "Quality".

This is not new, actually.

The standard sections have not changed.

Now you can set any resolution you want rather than just pick from ready-made presets. This option is genuinely valuable.

The nView section, however, does offer quite substantial changes. The major innovation of nView 3.0 is the "gridlines" feature, which allows partitioning the screen more effectively and conveniently into several independent zones. If you are the lucky owner of a Quadro professional video card, the number of such zones can be up to 9, while GeForce family cards offer merely 4, which in our view is more than enough.

Also, official support for the most recent chips, the GeForce FX 5700, GeForce FX 5700 Ultra and GeForce FX 5950 Ultra, has been introduced.

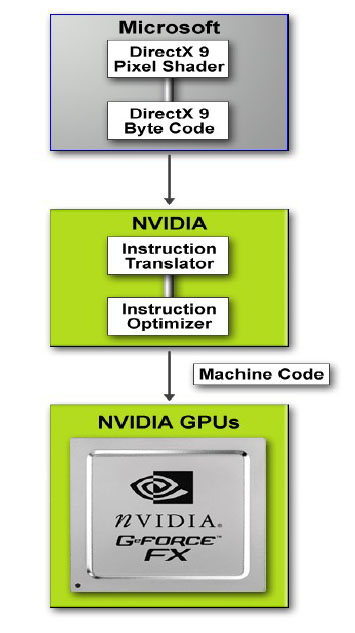

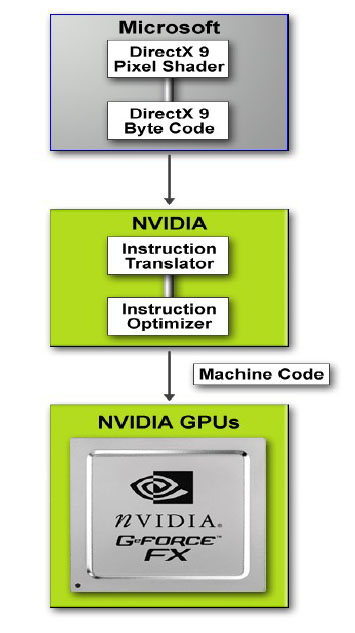

Of course, we were interested in the driver's other innovations, namely the revised "unified" compiler for DirectX 9.0 code. The idea is that the compiler receives instructions as plain DirectX 9.0 code, interprets them for the chip, and rebuilds the order and structure of the commands in real time, so that the GeForce FX chip gets reworked code that executes faster than if the commands were fed to the graphics chip "as is". This is illustrated in the following diagram:

Potentially, the compiler can reduce the number of passes required by the code that comes directly out of the API. In the end, this may improve the accelerator's performance in handling pixel and vertex shader programs. Note also that image quality does not suffer, since the optimizations do not touch the floating-point precision settings: they only rebuild the order and structure of commands, which logically can't degrade the image because the requested shader is still executed in full. What changes is that the accelerator always gets code better suited to the architecture of FX chips.
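The claim that reordering alone cannot change the result can be sketched with a toy example in Python. This is not NVIDIA's compiler: the instruction set, the `reorder` and `run` helpers, and the rule of alternating texture fetches with arithmetic are all assumptions made up for illustration, and each register is assumed to be written only once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    dest: str     # register written (ASSUMPTION: written exactly once)
    op: str       # "tex" (stand-in for a texture fetch), "mul" or "add"
    srcs: tuple   # registers or shader inputs that are read


def reorder(program):
    """Greedy reordering: alternate 'tex' and arithmetic where dependencies allow."""
    produced = {ins.dest for ins in program}
    remaining, done, out, last_is_tex = list(program), set(), [], None
    while remaining:
        # Ready = every source is a shader input or an already written register.
        ready = [ins for ins in remaining
                 if all(s in done or s not in produced for s in ins.srcs)]
        # Prefer an instruction of the other kind than the previous one.
        pick = next((ins for ins in ready
                     if (ins.op == "tex") != last_is_tex), ready[0])
        remaining.remove(pick)
        done.add(pick.dest)
        out.append(pick)
        last_is_tex = pick.op == "tex"
    return out


def run(program, inputs):
    """Execute the toy program and return all register values."""
    regs = dict(inputs)
    for ins in program:
        args = [regs[s] for s in ins.srcs]
        if ins.op == "tex":
            regs[ins.dest] = 0.5 * args[0] + 0.25   # fake texture lookup
        elif ins.op == "mul":
            regs[ins.dest] = args[0] * args[1]
        else:
            regs[ins.dest] = args[0] + args[1]
    return regs


PROGRAM = [
    Instr("t0", "tex", ("uv0",)),
    Instr("t1", "tex", ("uv1",)),
    Instr("a", "mul", ("t0", "k")),
    Instr("b", "mul", ("t1", "k")),
    Instr("c", "add", ("a", "b")),
]

if __name__ == "__main__":
    inputs = {"uv0": 0.3, "uv1": 0.7, "k": 1.5}
    print([ins.dest for ins in reorder(PROGRAM)])
    print(run(PROGRAM, inputs) == run(reorder(PROGRAM), inputs))
```

Because every instruction still receives exactly the same operands, the reordered program produces bit-identical register values. Only the order in which the hardware sees the work changes.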

Don't think this idea of optimization has only just occurred to NVIDIA's programmers. The foundations of the "unified compiler" were laid back in Detonator 44.12, but the idea was not brought to perfection then, so the polishing and finishing of a really working technology was left to later drivers of the ForceWare series.

Optimizing the code that enters the GPU is no doubt a good idea, and NVIDIA's programmers deserve real credit for it, but the matter of floating-point precision is still open. Since the most recent version of the Microsoft High Level Shader Language lets programmers choose the floating-point precision when writing code, NVIDIA positions the ability of the GeForce FX architecture to choose one of three precision modes (the already mentioned 32-bit and 16-bit floating-point modes and the 12-bit integer mode) as an advantage of its chips. This is indeed hard to deny: why should 32-bit or 24-bit precision (in the case of ATI boards) always be used if 16-bit floating-point precision is enough for specific tasks that do not need more? The catch is that it's not always possible to choose the right precision for a particular task, so programmers are expected to spend much more effort on writing and optimizing the code.
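As a rough illustration of why the right precision depends on the task, the Python sketch below checks two cases: a color value headed for an 8-bit-per-channel frame buffer, and a texture coordinate addressing texels in a wide texture. It assumes the 16-bit format behaves like IEEE 754 half precision (Python's `struct` format `e`). The function names and texture widths are hypothetical, and real shaders chain many operations, which this single round trip does not model.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to IEEE 754 half precision and back."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def color_mismatches() -> int:
    """Count 8-bit color levels that change after a round trip through fp16."""
    return sum(1 for level in range(256)
               if round(to_fp16(level / 255) * 255) != level)


def texel_mismatches(width: int) -> int:
    """Count texel centers that land in the wrong texel when stored as fp16."""
    return sum(1 for i in range(width)
               if int(to_fp16((i + 0.5) / width) * width) != i)


if __name__ == "__main__":
    print("color levels changed:", color_mismatches())
    for width in (256, 4096):
        print("texture width", width, "wrong texels:", texel_mismatches(width))
```

Under these assumptions every 8-bit color level survives a trip through 16-bit precision, and so do the texel centers of a 256-texel-wide texture, while a 4096-texel-wide texture already has centers that land in the wrong texel. This is the kind of case-by-case judgment the programmer has to make.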

Contents:

- ForceWare 52.16 Driver

- ASUS V9950 (FX5900) Video Card

- Sapphire Radeon 9800 Video Card

- Synthetic benchmarks

- Gaming benchmarks

- Image quality

- Final Words
